Sheng Cheng

I am a researcher at Amazon AGI. Before joining Amazon, I was a PhD student at ASU, where I worked with Yezhou Yang. Previously, I received my M.Eng. in Electrical Engineering from the University of Illinois at Urbana-Champaign, working with Ruoyu Sun, and my B.S. from Huazhong University of Science and Technology.

Research

I work on multimodal LLMs at Amazon, and previously worked on video generation. My earlier research focused on vision & language, vision generalization & robustness, and AI in Science.

Publications

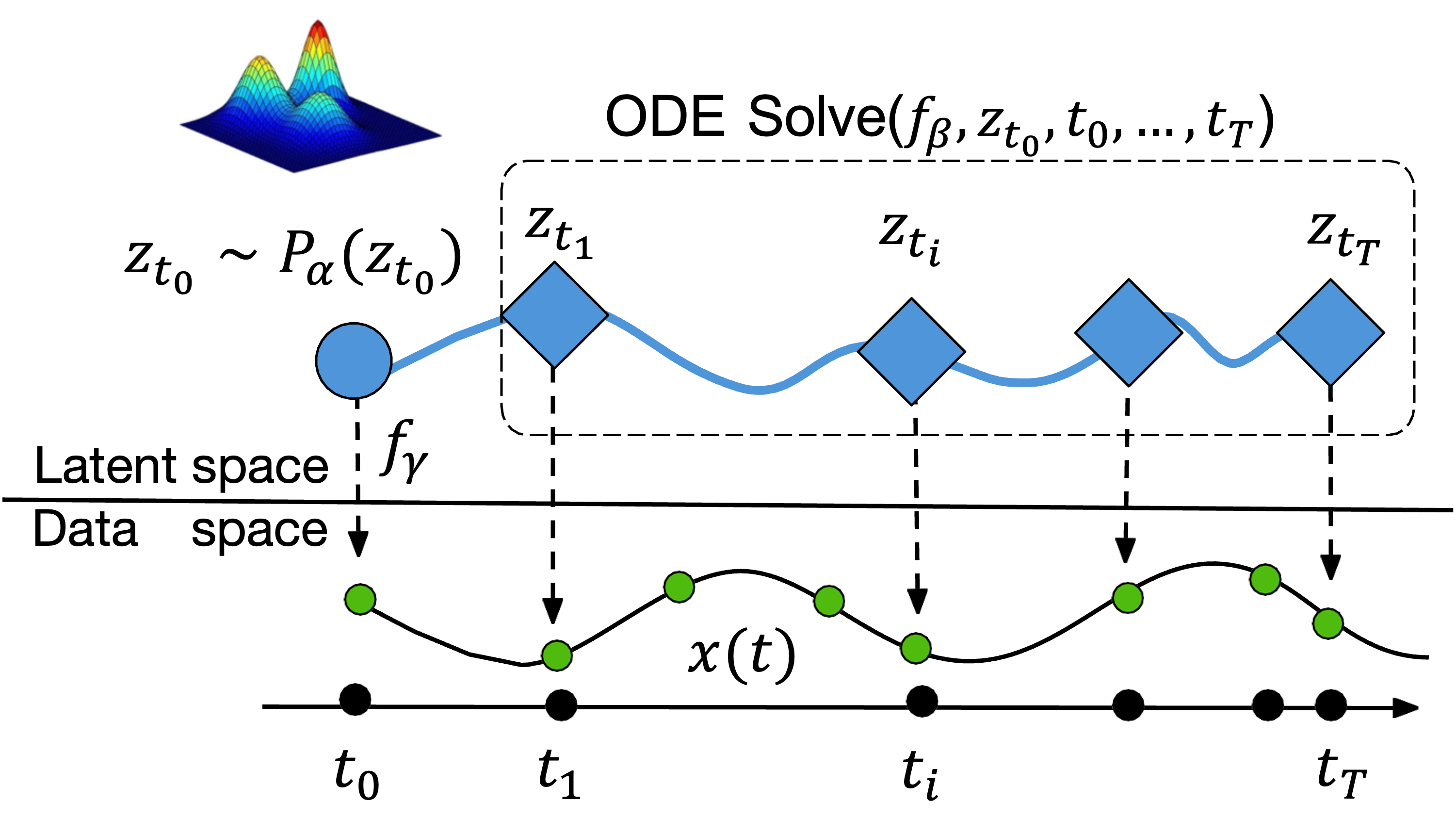

Sheng Cheng*, Deqian Kong*, Jianwen Xie, Kookjin Lee, Ying Nian Wu‡, Yezhou Yang‡

TMLR 2025

Integrating an energy-based prior model with Neural ODEs for latent-space modeling of continuous-time sequence data, trained by MLE with MCMC instead of an inference network.

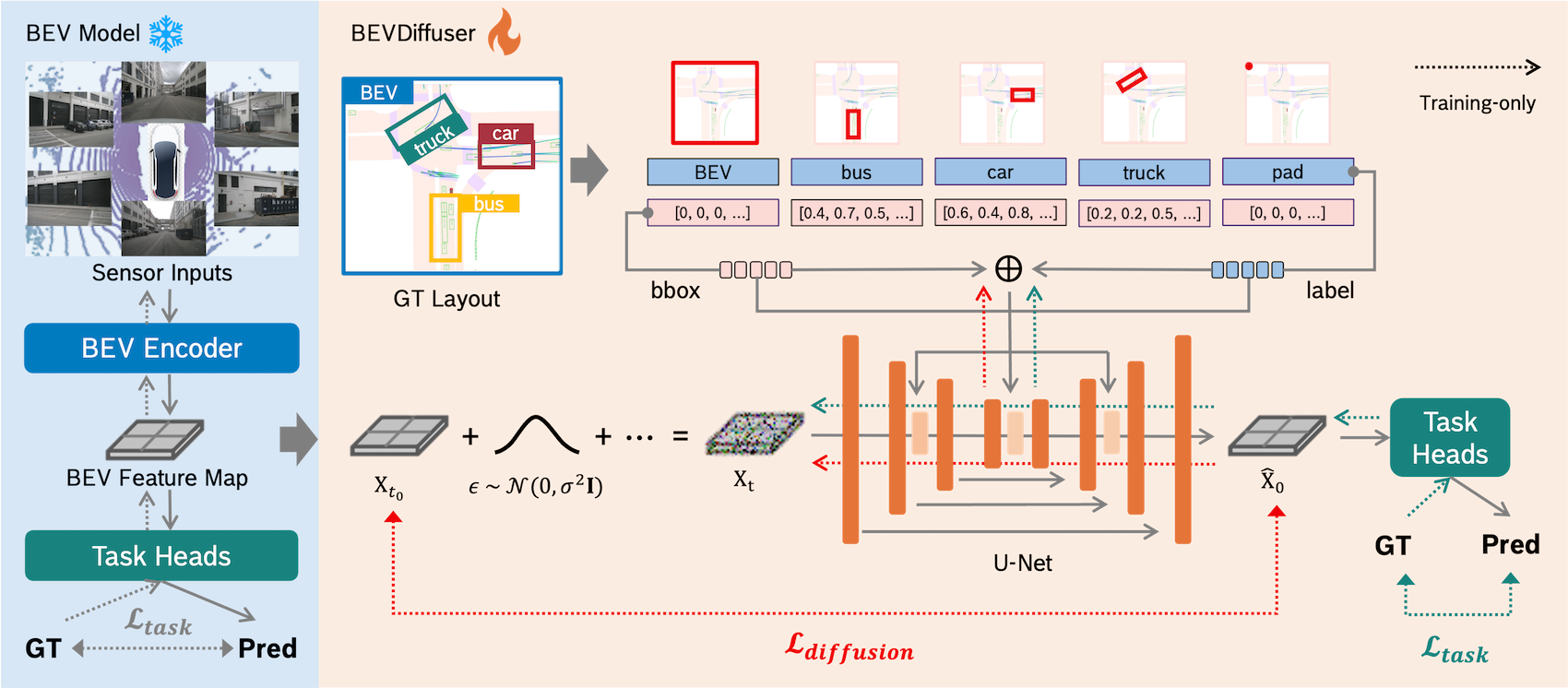

Xin Ye, Burhaneddin Yaman, Sheng Cheng, Feng Tao, Abhirup Mallik, Liu Ren

CVPR 2025

Project Page / arXiv / Code

Enhancing BEVFormer with a diffusion model for autonomous driving tasks.

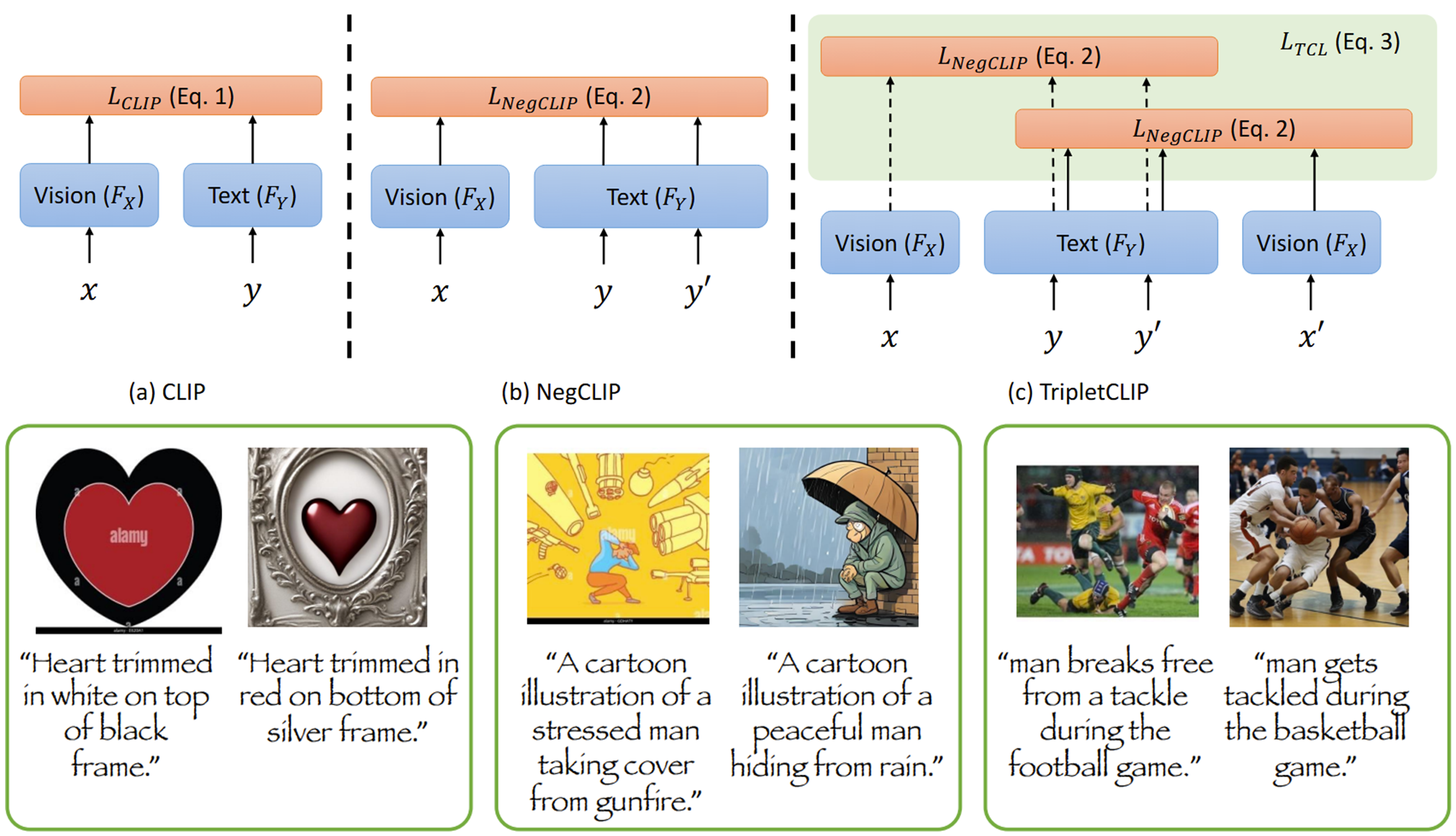

Maitreya Patel, Abhiram Kusumba, Sheng Cheng, Changhoon Kim, Tejas Gokhale, Chitta Baral, Yezhou Yang

NeurIPS 2024

Project Page / arXiv / Code

We enhance CLIP models by generating "hard" negative captions and images to improve their compositional reasoning ability.

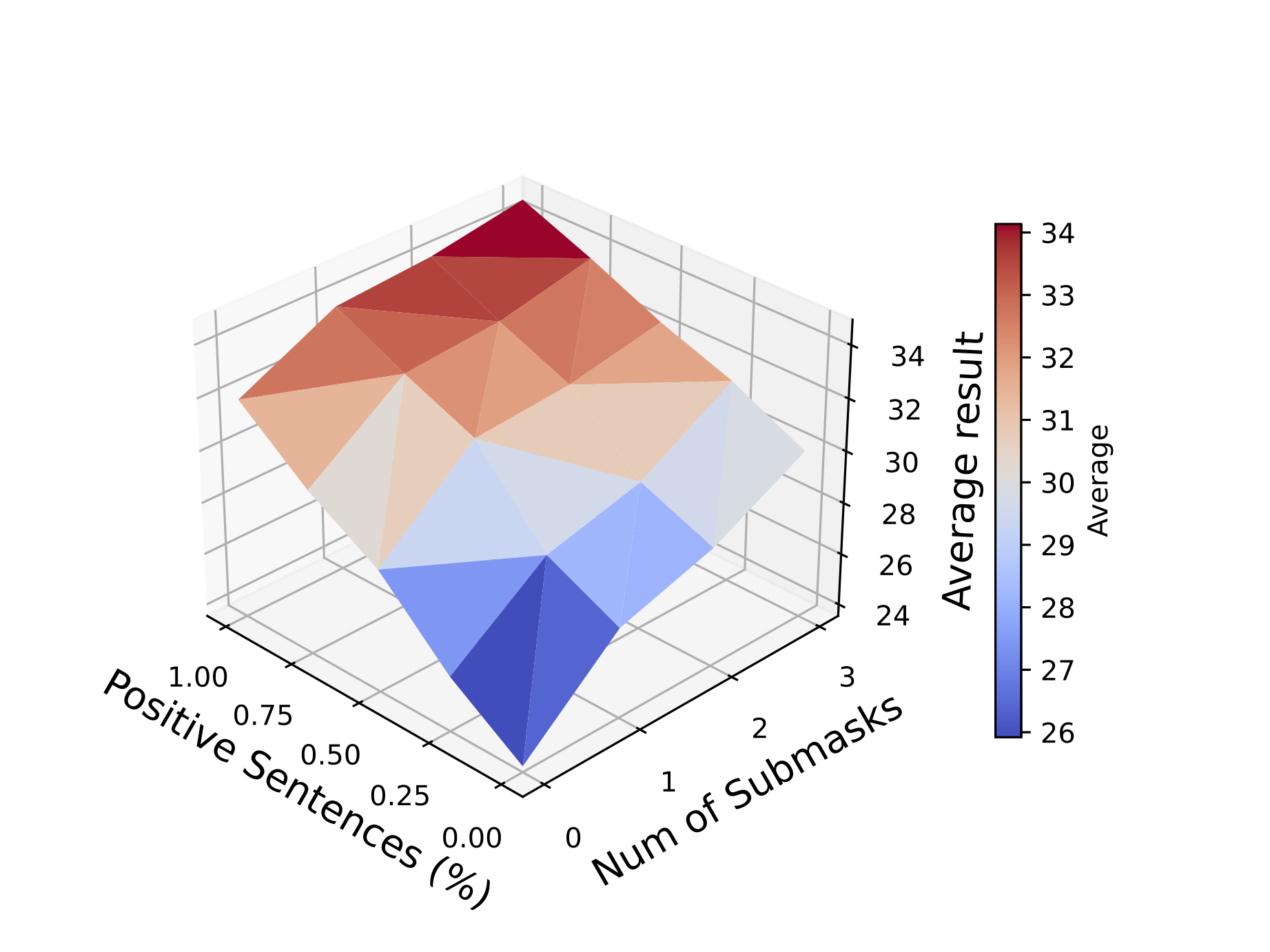

Sheng Cheng, Maitreya Patel, Yezhou Yang

EMNLP 2024, Findings

We analyze the impact of precision and recall in human-annotated and synthetic captions on the training of text-to-image models.

Maitreya Patel, Changhoon Kim, Sheng Cheng, Chitta Baral, Yezhou Yang

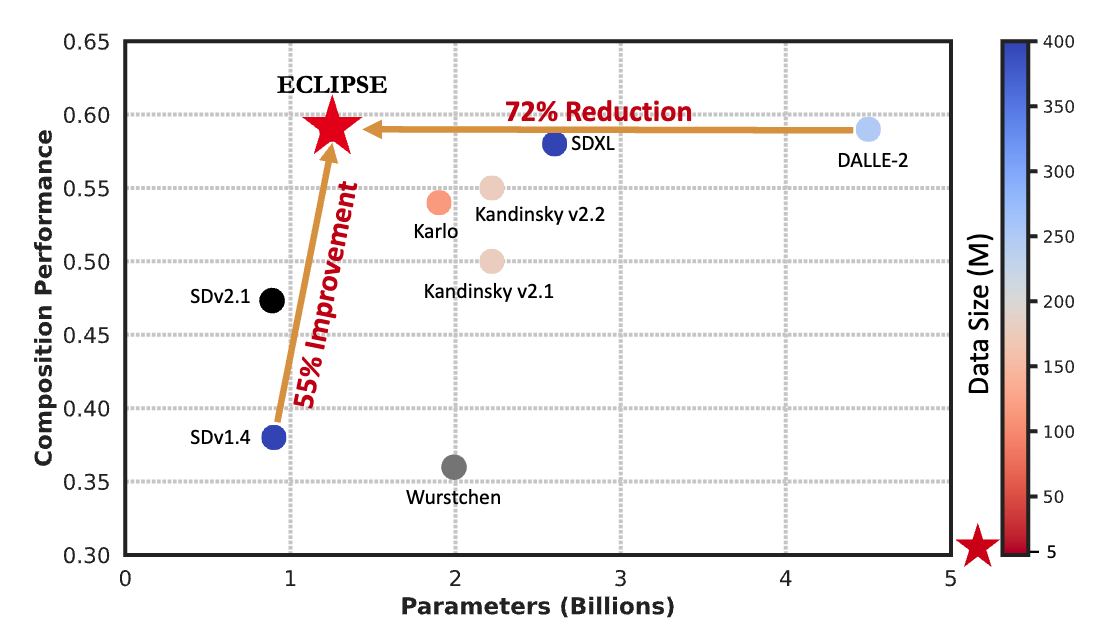

CVPR 2024

Project Page / Demo / arXiv / Code / Media coverage (AK's Twitter, MarkTechPost, MultiPlatformAI, Video discussion, Paper Digest)

Improving the parameter and data efficiency of text-to-image priors for unCLIP-family models with a contrastive loss.

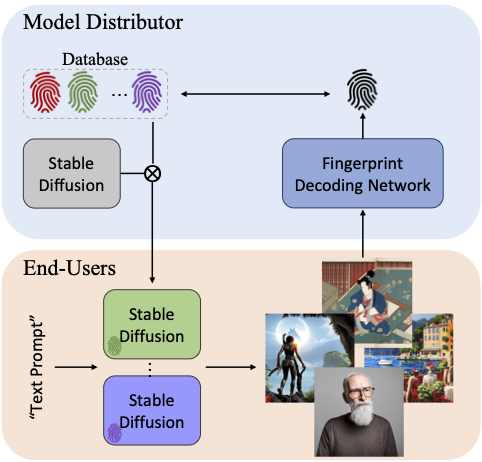

Changhoon Kim, Kyle Min, Maitreya Patel, Sheng Cheng, Yezhou Yang

CVPR 2024

Project Page / Demo / arXiv

Enabling the integration of up to 32-bit (~4 billion) fingerprints into text-to-image diffusion models without loss of image quality.

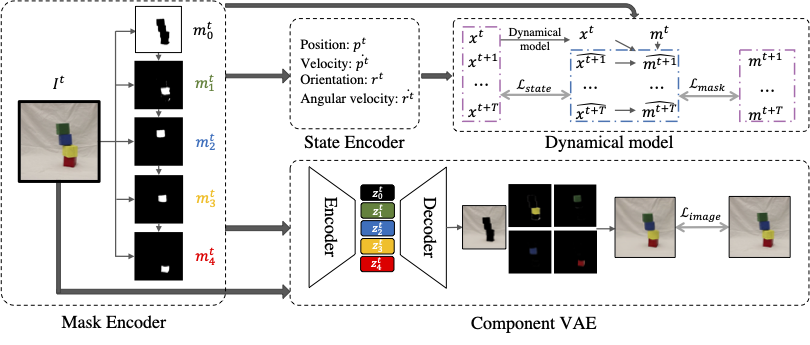

Sheng Cheng, Yezhou Yang, Yang Jiao, Yi Ren

NeurIPS AI4Science workshop, 2023

Jointly learning to discover physical objects and predict their dynamics in videos of physical environments.

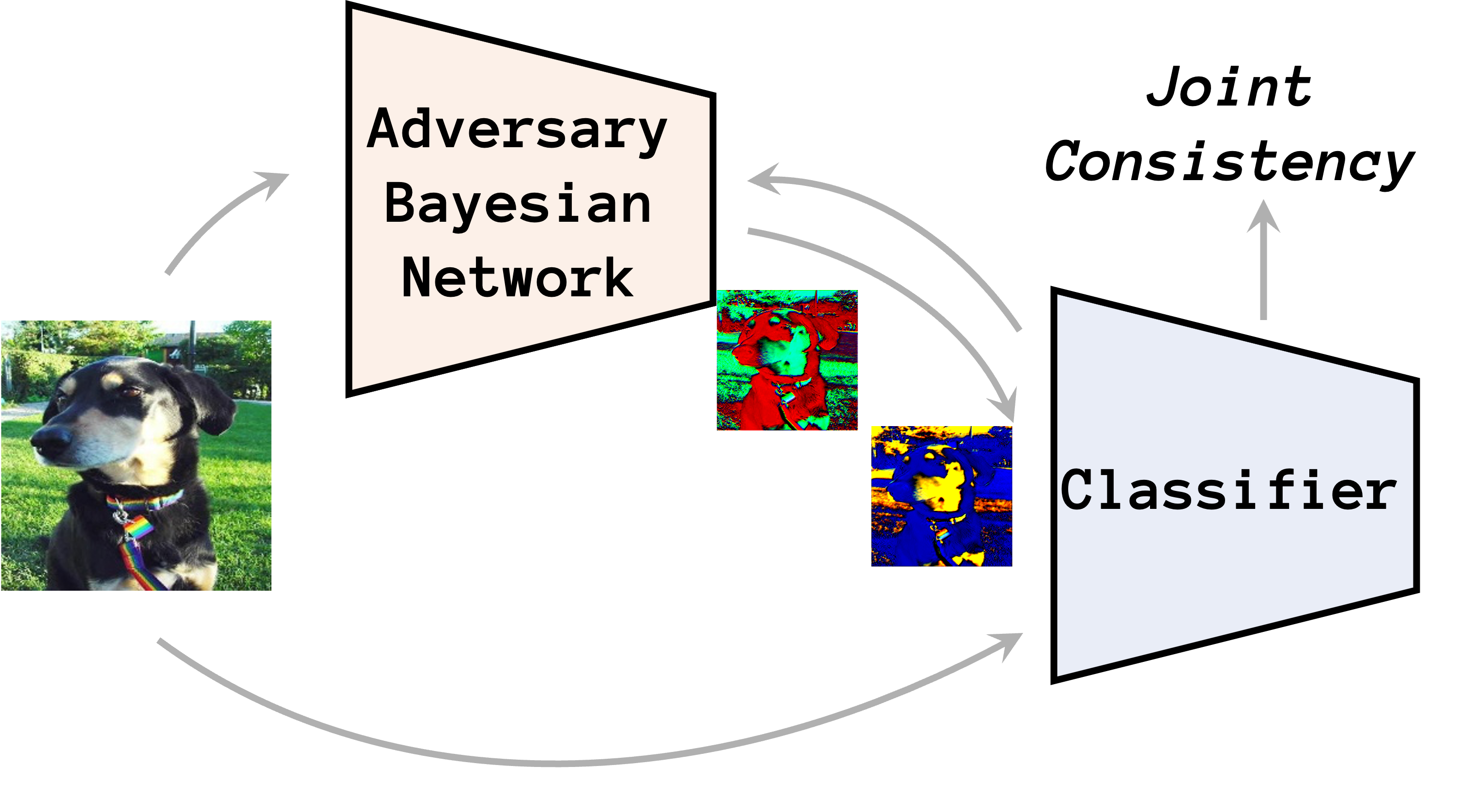

Sheng Cheng, Tejas Gokhale, Yezhou Yang

ICCV 2023

Adversarial learning combined with Bayesian neural networks for single-source domain generalization.

Sheng Cheng, Yi Ren, Yezhou Yang

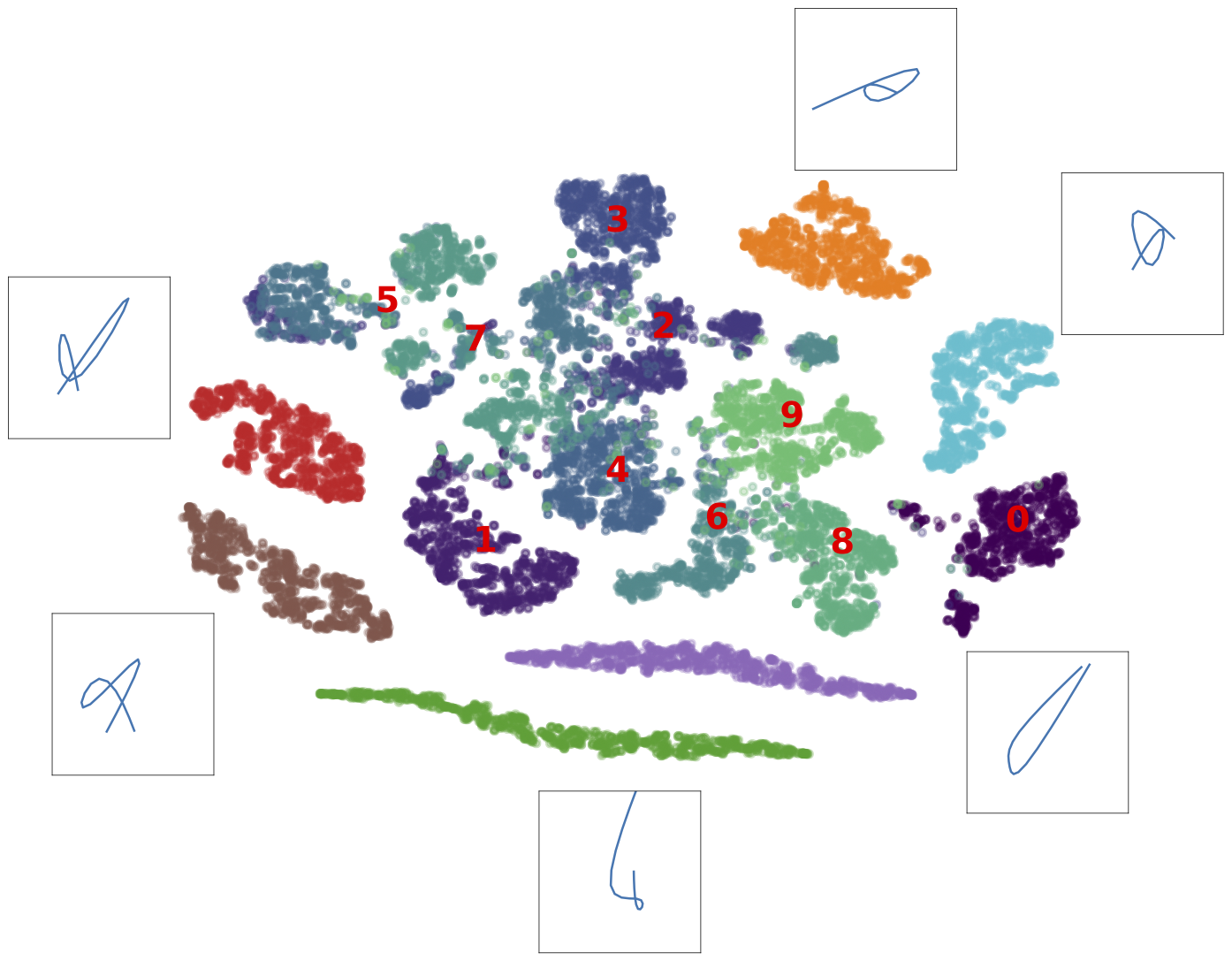

CVPR Sketch workshop, 2022

Transformation-invariant sketch recognition by decomposing sketches into strokes and composing them with a graph neural network.

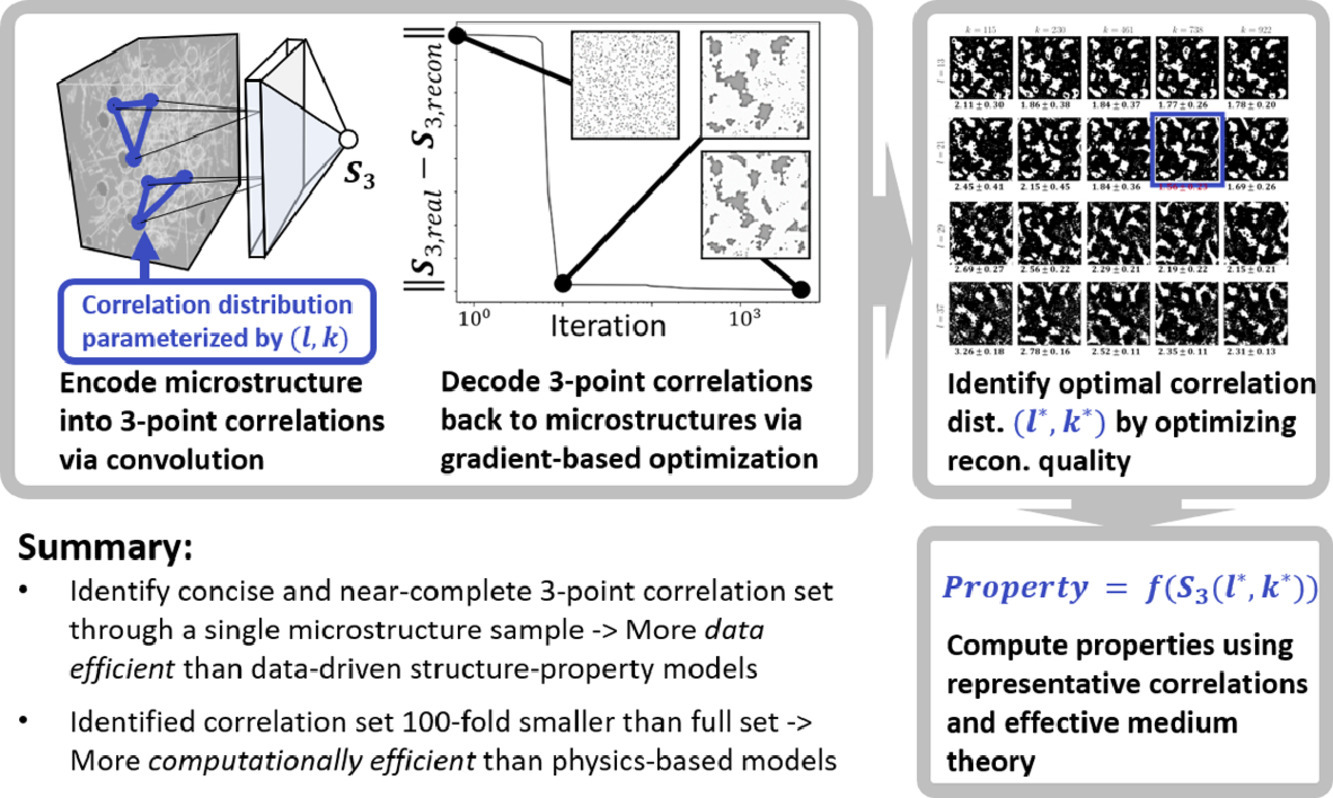

Sheng Cheng, Yang Jiao, Yi Ren

Acta Materialia, 2022

Learning microstructure representations via 3-point correlation functions.

Yutian Pang, Sheng Cheng, Jueming Hu, Yongming Liu

CVPR Adversarial Machine Learning workshop, 2021

Evaluating the robustness gain of Bayesian neural networks on image classification tasks.

Work Experience

- Amazon AGI, Feb 2025 - Now

  - Image/Video generation

- Amazon AWS, Fall 2024

  - Mitigating hallucinations in MLLMs using hard negative samples.

- Bosch US, Summer 2024

  - Mask-controlled autonomous driving video generation.

- Amazon Alexa, Summer 2023

  - Zero-shot, mask-annotation-free open-vocabulary semantic segmentation using a text-to-image model.

- UltruFit.ai, Summer 2022

  - A real-time, camera-based system for evaluating and scoring human exercises.

- Hikvision Research, Spring 2019

  - Research on a new super-resolution metric based on the one-to-many nature of the mapping.

Service & Honors

- Reviewer: CVPR, NeurIPS, TNNLS, TIP, ICLR

- 2023-24 ASU Graduate College Travel Award

- CVPR 2024 Doctoral Consortium

- Organizer of ASU Frontier Topics in GenAI Seminar